14.3 Sensitivity Analysis

Given an optimization problem, it is often useful to know how the optimal objective value changes when the problem parameters are perturbed. For example, assume that a bound represents the capacity of a machine. It may be possible to expand the capacity at a certain cost, and hence it is worthwhile knowing what the value of additional capacity is. This is precisely the type of question that sensitivity analysis addresses.

Analyzing how the optimal objective value changes when the problem data is changed is called sensitivity analysis.

References

The book [Chvatal83] discusses the classical sensitivity analysis in Chapter 10 whereas the book [RTV97] presents a modern introduction to sensitivity analysis. Finally, it is recommended to read the short paper [Wal00] to avoid some of the pitfalls associated with sensitivity analysis.

Warning

Currently, sensitivity analysis is only available for continuous linear optimization problems. Moreover, MOSEK can only deal with perturbations of bounds and objective function coefficients.

14.3.1 Sensitivity Analysis for Linear Problems

14.3.1.1 The Optimal Objective Value Function

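Assume that we are given the problem

\[
\begin{array}{lcll}
z(l^c,u^c,l^x,u^x,c) & = & \mbox{minimize}   & c^T x \\
                     &   & \mbox{subject to} & l^c \leq Ax \leq u^c, \\
                     &   &                   & l^x \leq x \leq u^x,
\end{array} \tag{14.3}
\]

and we want to know how the optimal objective value changes as \(l^c_i\) is perturbed. To answer this question we define the perturbed problem for \(l^c_i\) as follows

\[
\begin{array}{lcll}
f_{l^c_i}(\beta) & = & \mbox{minimize}   & c^T x \\
                 &   & \mbox{subject to} & l^c + \beta e_i \leq Ax \leq u^c, \\
                 &   &                   & l^x \leq x \leq u^x,
\end{array}
\]

where \(e_i\) is the \(i\)-th column of the identity matrix. The function

\[
f_{l^c_i}(\beta) \tag{14.4}
\]

shows the optimal objective value as a function of \(\beta\). Please note that a change in \(\beta\) corresponds to a perturbation in \(l_i^c\) and hence (14.4) shows the optimal objective value as a function of varying \(l^c_i\) with the other bounds fixed.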

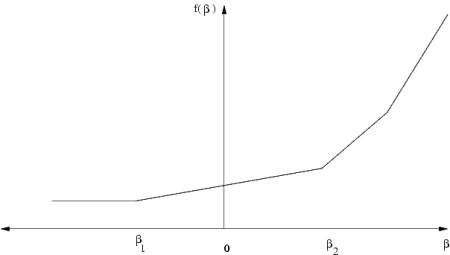

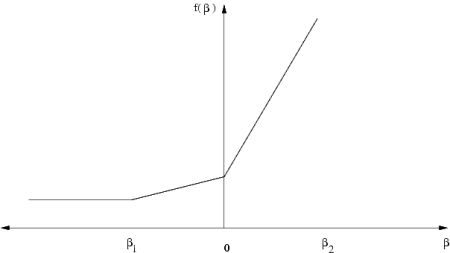

It is possible to prove that the function (14.4) is a piecewise linear and convex function, i.e. its graph may look like in Fig. 14.1 and Fig. 14.2.

Fig. 14.1 \(\beta =0\) is in the interior of linearity interval.¶

Fig. 14.2 \(\beta =0\) is a breakpoint.¶

Clearly, if the function \(f_{l^c_i}(\beta )\) does not change much when \(\beta\) is changed, then we can conclude that the optimal objective value is insensitive to changes in \(l_i^c\). Therefore, we are interested in the rate of change in \(f_{l^c_i}(\beta)\) for small changes in \(\beta\), specifically the gradient

\[
f^\prime_{l^c_i}(0),
\]

which is called the shadow price related to \(l^c_i\). The shadow price specifies how the objective value changes for small changes of \(\beta\) around zero. Moreover, we are interested in the linearity interval

\[
\beta \in [\beta_1,\beta_2]
\]

for which

\[
f^\prime_{l^c_i}(\beta) = f^\prime_{l^c_i}(0).
\]

Since \(f_{l^c_i}\) is not a smooth function, \(f^\prime_{l^c_i}\) may not be defined at \(0\), as illustrated in Fig. 14.2. In this case we can define a left and a right shadow price and a left and a right linearity interval.

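The function \(f_{l^c_i}\) considered only changes in \(l^c_i\). We can define similar functions for the remaining parameters of \(z\) defined in (14.3) as well:

\[
\begin{array}{rcl}
f_{l^c_i}(\beta) & = & z(l^c + \beta e_i, u^c, l^x, u^x, c), \\
f_{u^c_i}(\beta) & = & z(l^c, u^c + \beta e_i, l^x, u^x, c), \\
f_{l^x_j}(\beta) & = & z(l^c, u^c, l^x + \beta e_j, u^x, c), \\
f_{u^x_j}(\beta) & = & z(l^c, u^c, l^x, u^x + \beta e_j, c), \\
f_{c_j}(\beta)   & = & z(l^c, u^c, l^x, u^x, c + \beta e_j).
\end{array}
\]

Given these definitions it should be clear how linearity intervals and shadow prices are defined for the parameters \(u^c_i\) etc.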

14.3.1.1.1 Equality Constraints

In MOSEK a constraint can be specified as either an equality constraint or a ranged constraint. If some constraint \(e_i^c\) is an equality constraint, we define the optimal value function for this constraint as

\[
f_{e^c_i}(\beta) = z(l^c + \beta e_i, u^c + \beta e_i, l^x, u^x, c).
\]

Thus for an equality constraint the upper and the lower bounds (which are equal) are perturbed simultaneously. Therefore, MOSEK will handle sensitivity analysis differently for a ranged constraint with \(l^c_i = u^c_i\) and for an equality constraint.

14.3.1.2 The Basis Type Sensitivity Analysis

The classical sensitivity analysis discussed in most textbooks about linear optimization, e.g. [Chvatal83], is based on an optimal basis. This method may produce misleading results [RTV97] but is computationally cheap. This is the type of sensitivity analysis implemented in MOSEK.

We will now briefly discuss the basis type sensitivity analysis. Given an optimal basic solution, which provides a partition of the variables into basic and non-basic variables, the basis type sensitivity analysis computes the linearity interval \([\beta_1,\beta_2]\) so that the basis remains optimal for the perturbed problem. A shadow price associated with the linearity interval is also computed. However, it is well known that an optimal basic solution may not be unique, and therefore the result depends on the optimal basic solution employed in the sensitivity analysis. If the optimal objective value function has a breakpoint at \(\beta = 0\), then the basis type sensitivity method will only provide a subset of either the left or the right linearity interval.

In summary, the basis type sensitivity analysis is computationally cheap but does not provide complete information. Hence, the results of the basis type sensitivity analysis should be used with care.

14.3.1.3 Example: Sensitivity Analysis¶

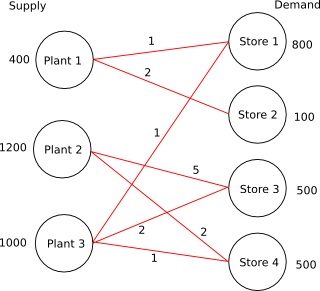

As an example we will use the following transportation problem. Consider the problem of minimizing the transportation cost between a number of production plants and stores. Each plant supplies a number of goods and each store has a given demand that must be met. Supply, demand and cost of transportation per unit are shown in Fig. 14.3.

Fig. 14.3 Supply, demand and cost of transportation.¶

If we denote the number of transported goods from location \(i\) to location \(j\) by \(x_{ij}\), problem can be formulated as the linear optimization problem of minimizing

subject to

The sensitivity parameters are shown in Table 14.1 and Table 14.2.

| Con. | \(\beta_1\) | \(\beta_2\) | \(\sigma_1\) | \(\sigma_2\) |
|---|---|---|---|---|
| \(1\) | \(-300.00\) | \(0.00\) | \(3.00\) | \(3.00\) |
| \(2\) | \(-700.00\) | \(+\infty\) | \(0.00\) | \(0.00\) |
| \(3\) | \(-500.00\) | \(0.00\) | \(3.00\) | \(3.00\) |
| \(4\) | \(-0.00\) | \(500.00\) | \(4.00\) | \(4.00\) |
| \(5\) | \(-0.00\) | \(300.00\) | \(5.00\) | \(5.00\) |
| \(6\) | \(-0.00\) | \(700.00\) | \(5.00\) | \(5.00\) |
| \(7\) | \(-500.00\) | \(700.00\) | \(2.00\) | \(2.00\) |

| Var. | \(\beta_1\) | \(\beta_2\) | \(\sigma_1\) | \(\sigma_2\) |
|---|---|---|---|---|
| \(x_{11}\) | \(-\infty\) | \(300.00\) | \(0.00\) | \(0.00\) |
| \(x_{12}\) | \(-\infty\) | \(100.00\) | \(0.00\) | \(0.00\) |
| \(x_{23}\) | \(-\infty\) | \(0.00\) | \(0.00\) | \(0.00\) |
| \(x_{24}\) | \(-\infty\) | \(500.00\) | \(0.00\) | \(0.00\) |
| \(x_{31}\) | \(-\infty\) | \(500.00\) | \(0.00\) | \(0.00\) |
| \(x_{33}\) | \(-\infty\) | \(500.00\) | \(0.00\) | \(0.00\) |
| \(x_{34}\) | \(-0.000000\) | \(500.00\) | \(2.00\) | \(2.00\) |

| Var. | \(\beta_1\) | \(\beta_2\) | \(\sigma_1\) | \(\sigma_2\) |
|---|---|---|---|---|
| \(c_1\) | \(-\infty\) | \(3.00\) | \(300.00\) | \(300.00\) |
| \(c_2\) | \(-\infty\) | \(\infty\) | \(100.00\) | \(100.00\) |
| \(c_3\) | \(-2.00\) | \(\infty\) | \(0.00\) | \(0.00\) |
| \(c_4\) | \(-\infty\) | \(2.00\) | \(500.00\) | \(500.00\) |
| \(c_5\) | \(-3.00\) | \(\infty\) | \(500.00\) | \(500.00\) |
| \(c_6\) | \(-\infty\) | \(2.00\) | \(500.00\) | \(500.00\) |
| \(c_7\) | \(-2.00\) | \(\infty\) | \(0.00\) | \(0.00\) |

Examining the results from the sensitivity analysis we see that for constraint number \(1\) we have \(\sigma_1 = 3\) and \(\beta_1 = -300,\ \beta_2=0\).

If the upper bound on constraint \(1\) is decreased by

\[
\beta \in [0, 300]
\]

then the optimal objective value will increase by the value

\[
\sigma_1 \beta = 3 \beta.
\]

14.3.2 Sensitivity Analysis with MOSEK

MOSEK provides the functions MSK_primalsensitivity and MSK_dualsensitivity for performing sensitivity analysis. The code in Listing 14.2 gives an example of their use.

```c
#include <stdio.h>

#include "mosek.h" /* Include the MOSEK definition file. */

static void MSKAPI printstr(void *handle,
                            const char str[])
{
  printf("%s", str);
} /* printstr */

int main(int argc, char *argv[])
{
  const MSKint32t numcon = 7,
                  numvar = 7;
  MSKint32t       i;

  /* Constraints 1-3 are plant capacities, constraints 4-7 are store demands. */
  MSKboundkeye    bkc[] = {MSK_BK_UP, MSK_BK_UP, MSK_BK_UP, MSK_BK_FX,
                           MSK_BK_FX, MSK_BK_FX, MSK_BK_FX
                          };
  MSKboundkeye    bkx[] = {MSK_BK_LO, MSK_BK_LO, MSK_BK_LO,
                           MSK_BK_LO, MSK_BK_LO, MSK_BK_LO, MSK_BK_LO
                          };

  /* Column-wise sparse representation of A. */
  MSKint32t       ptrb[] = {0, 2, 4, 6, 8, 10, 12};
  MSKint32t       ptre[] = {2, 4, 6, 8, 10, 12, 14};
  MSKint32t       sub[] = {0, 3, 0, 4, 1, 5, 1, 6, 2, 3, 2, 5, 2, 6};
  MSKrealt        blc[] = { -MSK_INFINITY, -MSK_INFINITY, -MSK_INFINITY, 800, 100, 500, 500};
  MSKrealt        buc[] = {400, 1200, 1000, 800, 100, 500, 500};
  MSKrealt        c[]   = {1.0, 2.0, 5.0, 2.0, 1.0, 2.0, 1.0};
  MSKrealt        blx[] = {0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0};
  MSKrealt        bux[] = {MSK_INFINITY, MSK_INFINITY, MSK_INFINITY, MSK_INFINITY,
                           MSK_INFINITY, MSK_INFINITY, MSK_INFINITY
                          };
  MSKrealt        val[] = {1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0};
  MSKrescodee     r;
  MSKenv_t        env;
  MSKtask_t       task;

  /* Create mosek environment. */
  r = MSK_makeenv(&env, NULL);

  if (r == MSK_RES_OK)
  {
    /* Make the optimization task. */
    r = MSK_makeemptytask(env, &task);

    if (r == MSK_RES_OK)
    {
      /* Directs the log task stream to the user
         specified procedure 'printstr'. */
      MSK_linkfunctotaskstream(task, MSK_STREAM_LOG, NULL, printstr);

      MSK_echotask(task,
                   MSK_STREAM_MSG,
                   "Defining the problem data.\n");
    }

    /* Append the constraints. */
    if (r == MSK_RES_OK)
      r = MSK_appendcons(task, numcon);

    /* Append the variables. */
    if (r == MSK_RES_OK)
      r = MSK_appendvars(task, numvar);

    /* Put C. */
    if (r == MSK_RES_OK)
      r = MSK_putcfix(task, 0.0);

    if (r == MSK_RES_OK)
      r = MSK_putcslice(task, 0, numvar, c);

    /* Put constraint bounds. */
    if (r == MSK_RES_OK)
      r = MSK_putconboundslice(task, 0, numcon, bkc, blc, buc);

    /* Put variable bounds. */
    if (r == MSK_RES_OK)
      r = MSK_putvarboundslice(task, 0, numvar, bkx, blx, bux);

    /* Put A. */
    if (r == MSK_RES_OK)
      r = MSK_putacolslice(task, 0, numvar, ptrb, ptre, sub, val);

    if (r == MSK_RES_OK)
      r = MSK_putobjsense(task, MSK_OBJECTIVE_SENSE_MINIMIZE);

    if (r == MSK_RES_OK)
      r = MSK_optimizetrm(task, NULL);

    if (r == MSK_RES_OK)
    {
      /* Analyze upper bound on c1 and the equality constraint on c4 */
      MSKint32t subi[] = {0, 3};
      MSKmarke marki[] = {MSK_MARK_UP, MSK_MARK_UP};

      /* Analyze lower bound on the variables x12 and x31 */
      MSKint32t subj[] = {1, 4};
      MSKmarke markj[] = {MSK_MARK_LO, MSK_MARK_LO};

      MSKrealt leftpricei[2];
      MSKrealt rightpricei[2];
      MSKrealt leftrangei[2];
      MSKrealt rightrangei[2];
      MSKrealt leftpricej[2];
      MSKrealt rightpricej[2];
      MSKrealt leftrangej[2];
      MSKrealt rightrangej[2];

      r = MSK_primalsensitivity(task,
                                2,
                                subi,
                                marki,
                                2,
                                subj,
                                markj,
                                leftpricei,
                                rightpricei,
                                leftrangei,
                                rightrangei,
                                leftpricej,
                                rightpricej,
                                leftrangej,
                                rightrangej);

      /* Print the results only if the analysis succeeded. */
      if (r == MSK_RES_OK)
      {
        printf("Results from sensitivity analysis on bounds:\n");

        printf("For constraints:\n");
        for (i = 0; i < 2; ++i)
          printf("leftprice = %e, rightprice = %e, leftrange = %e, rightrange = %e\n",
                 leftpricei[i], rightpricei[i], leftrangei[i], rightrangei[i]);

        printf("For variables:\n");
        for (i = 0; i < 2; ++i)
          printf("leftprice = %e, rightprice = %e, leftrange = %e, rightrange = %e\n",
                 leftpricej[i], rightpricej[i], leftrangej[i], rightrangej[i]);
      }
    }

    if (r == MSK_RES_OK)
    {
      /* Analyze the objective coefficients of the variables x23 and x33 */
      MSKint32t subj[] = {2, 5};
      MSKrealt leftprice[2];
      MSKrealt rightprice[2];
      MSKrealt leftrange[2];
      MSKrealt rightrange[2];

      r = MSK_dualsensitivity(task,
                              2,
                              subj,
                              leftprice,
                              rightprice,
                              leftrange,
                              rightrange
                             );

      /* Print the results only if the analysis succeeded. */
      if (r == MSK_RES_OK)
      {
        printf("Results from sensitivity analysis on objective coefficients:\n");

        for (i = 0; i < 2; ++i)
          printf("leftprice = %e, rightprice = %e, leftrange = %e, rightrange = %e\n",
                 leftprice[i], rightprice[i], leftrange[i], rightrange[i]);
      }
    }

    MSK_deletetask(&task);
  }

  MSK_deleteenv(&env);

  printf("Return code: %d (0 means no error occurred.)\n", r);

  return (r);
} /* main */
```